AI facing reality

Using AI to drive cost savings

The AI hype is all around us, and yes, AI is starting to drive data center management.

DCIM (Data Center Infrastructure Management) is an important and integral part of the data center (DC), providing dashboard-delivered analytics for data center monitoring, asset management, and capacity planning. It helps simplify the complexity of hybrid environments, enhance productivity, and increase resource efficiency. DCIM monitors the health of the data center with power distribution metering, thresholds, and alerts, and it manages IT assets with auto-discovery tools.

A DCIM needs a vast array of sensors to collect this information, and these developments are a very good step towards better use of power and resources. But would it not be great if you could predict the future temperature and react proactively?

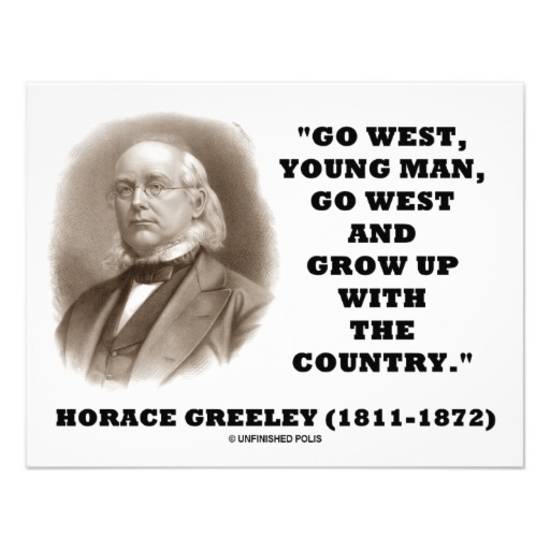

Google has shown that it was able to reduce the amount of energy used for cooling by 40% through the use of predictive algorithms within the Google DeepMind programme. They tackled three issues:

1. the non-linear ways in which different pieces of equipment interact with each other

2. the influence of external changes – namely the weather

3. the influence of the specific architecture and environment of each individual DC

The first two aspects in particular are dynamic, and given the large savings Google reports, using historical data to predict and proactively control your cooling is definitely the way to go.
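Predictive control can be illustrated with a deliberately simple sketch: roll a thermal model forward over a weather forecast and pick the lowest cooling output that keeps the predicted temperature under a limit (a brute-force form of model-predictive control). Everything here is hypothetical: the three coefficients stand in for values you would fit from historical sensor data, the cooling levels are made up, and the model is linear, so it does not capture the non-linear equipment interactions that Google's machine-learned models addressed.

```python
# Hypothetical coefficients, assumed to have been fitted from historical sensor data.
HEAT_PER_KW_IT = 0.02   # °C rise per time step per kW of IT load
COOL_PER_KW = 0.05      # °C drop per time step per kW of cooling output
OUTDOOR_COUPLING = 0.1  # share of the outdoor/indoor gap that leaks in per step

def simulate(temp_c, it_load_kw, cooling_kw, outdoor_forecast_c):
    """Roll a simple linear thermal model forward, one step per forecast value."""
    temps = []
    for outdoor_c in outdoor_forecast_c:
        temp_c += (HEAT_PER_KW_IT * it_load_kw
                   - COOL_PER_KW * cooling_kw
                   + OUTDOOR_COUPLING * (outdoor_c - temp_c))
        temps.append(temp_c)
    return temps

def choose_cooling(temp_c, it_load_kw, outdoor_forecast_c, limit_c, levels_kw):
    """Return the lowest cooling level whose predicted temperatures stay within the limit."""
    for level_kw in sorted(levels_kw):
        predicted = simulate(temp_c, it_load_kw, level_kw, outdoor_forecast_c)
        if max(predicted) <= limit_c:
            return level_kw
    return max(levels_kw)  # no level is predicted to suffice: run at full cooling

levels = [0, 20, 40, 60, 80]
print(choose_cooling(24.0, 100, [18.0] * 4, 27.0, levels))  # cool forecast: 20
print(choose_cooling(24.0, 100, [35.0] * 4, 27.0, levels))  # hot forecast: 60
```

The same IT load gets a different cooling setting depending on the forecast, which is the point of feeding weather into the prediction. Note that the sketch assumes the cooling plant can actually be set to each of these levels.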

What I do wonder, then, is this: on a legacy site you can install more sensors, deploy a DCIM and work on predictive algorithms, but was the DC designed so that you can precisely control what you need to in order to achieve the savings?

Ah, nothing like reality to dampen a good idea.